Brent Donnelly is a senior risk-taker and FX market maker, and has been trading foreign exchange since 1995. His latest book, Alpha Trader, was published last summer to great acclaim (by me, among others!) and can be found at your favorite bookseller. I think it’s an outstanding read, and not just for professional traders.

You can contact Brent at his new gig at bdonnelly@spectramarkets.com and on Twitter at @donnelly_brent.

As with all of our guest contributors, Brent’s post may not represent the views of Epsilon Theory or Second Foundation Partners, and should not be construed as advice to purchase or sell any security.

I was a pale, scrawny eight-year-old with long platinum hair, an Iron Maiden concert shirt and bad eczema. He was a chunky ten-year-old brick house named Dean Saunders. One lunch hour in 1980-something, out on the frozen tundra of my Ottawa elementary schoolyard, I made a foolish and daring offer.

“This whole, entire Crown Royal bag of marbles for your crystal beauty bonk. Yes or no?”

I knew I was better than him at marbles and I knew he was overconfident. He was also a large and scary kid. Like, a very small man.

I easily won the game of marbles (yay!), and when I stuck out my hand for the crystal beauty bonk, Dean Saunders slapped my hand away and said: “Fuck off.”

I immediately ran inside to Ms. Gillespie to appeal the violation, and she responded tersely:

“You should not be gambling at school, Brent.”

Oof.

Everyone has had that sinking “I am powerless here” feeling. Sure, the stakes were low, but the principle of the incident dug at me viscerally to the point where it still pops into my head at least once every five years or so.

Last week, I had that “I am powerless here” feeling again.

I was locked in my basement with symptomatic COVID, obediently following the CDC’s 10-day isolation guidelines. I had headaches and chills, but nothing serious. I was bored, so I filmed a YouTube video. The clip was a lofi, nerdcore rap recap of the year in US financial markets. It was jokey and harmless. It opened with:

“I’m locked in my basement with COVID, so I made this video for you.”

The rest of the content is light parody. It’s high rhyme density Seuss-meets-Eminem rap about 2021 financial events like Archegos, GameStop, and the boom in NFTs. The video got 1,400 views in two days and the feedback was friendly. You can view it here on Dropbox if you like, but you don’t really need to.

Then yesterday, I got this:

Wut? There was a bit more detail:

How your content violated the policy: YouTube doesn't allow content that explicitly disputes the efficacy of local health authorities' or World Health Organization (WHO) guidance on social distancing and self isolation that may lead people to act against that guidance.

Like Dean failing to pay off the marble bet, this is low stakes stuff. Who cares if the video has been disappeared by the corporate AI? Nobody, really. But like the marble bet, it gave me that sense of “This is very wrong, in an important but hard to explain kinda way.”

There is absolutely nothing nefarious going on in my video. But when computer says no… Computer says no. I clicked on the appeal link and got a reply a few hours later…

Computer says no.

Meanwhile, on Twitter someone has copied my profile (picture included) to impersonate me and DM people with a variety of crypto scams. I reported the scammer three weeks ago and he/she has been reported by dozens of my followers. A simple picture and word matching algorithm would conclude that the scammer is using my exact profile pic and has 5% of the followers. Simple, objective pattern recognition is the easiest task for an AI. But for whatever reason (minimize cost? maximize “active users”), Twitter can’t be bothered.
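To give a sense of how simple that kind of matching could be, here is a minimal sketch in Python. It is not how Twitter works; the `Profile` fields, the thresholds, and the follower counts are all hypothetical, and hashing the raw image bytes only catches byte-for-byte copies (a real system would more likely use a perceptual hash).

```python
import hashlib
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass
class Profile:
    # Hypothetical fields chosen for illustration only.
    handle: str
    display_name: str
    avatar_bytes: bytes  # raw bytes of the profile picture
    followers: int


def avatar_fingerprint(profile: Profile) -> str:
    """Exact-match fingerprint: identical image bytes give identical hashes."""
    return hashlib.sha256(profile.avatar_bytes).hexdigest()


def name_similarity(a: str, b: str) -> float:
    """Similarity ratio in [0, 1]; 1.0 means identical ignoring case."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def looks_like_impersonator(
    original: Profile,
    suspect: Profile,
    name_threshold: float = 0.8,   # assumed cutoff, not a known industry value
    follower_ratio: float = 0.10,  # assumed cutoff, not a known industry value
) -> bool:
    """Flag a suspect that reuses the avatar and name but has far fewer followers."""
    same_avatar = avatar_fingerprint(original) == avatar_fingerprint(suspect)
    similar_name = (
        name_similarity(original.display_name, suspect.display_name) >= name_threshold
    )
    far_fewer_followers = suspect.followers < follower_ratio * original.followers
    return same_avatar and similar_name and far_fewer_followers


# Made-up example data: the suspect copies the picture and name, with 5% of the followers.
picture = b"placeholder-image-bytes"
real = Profile("donnelly_brent", "Brent Donnelly", picture, 100_000)
fake = Profile("donnelly_brent_", "Brent Donnelly", picture, 5_000)
stranger = Profile("someone_else", "Someone Else", b"other-image-bytes", 5_000)

flag_fake = looks_like_impersonator(real, fake)
flag_stranger = looks_like_impersonator(real, stranger)
```

A flag like this would be a reason to send the account to a human reviewer, not a verdict on its own.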

I am not a believer in complex conspiracies because I don’t believe complex organizations like government have the coordination or skill to execute elaborate ploys to control the public.

What I do believe is that artificial intelligence algorithms are making decisions all around us that are partially random, impossible to explain ex-post, sometimes wrong, and often dangerous.

What are the implications here? For me, in this case, zero. This is super low-stakes stuff. It does, however, give me a peek into how it might feel if this video was important to me or communicated something meaningful. This particular anecdote is meaningless on the surface but deeper down it gives me that creeping Bo Burnham Funny Feeling that things are not quite right.

If there is no human on the other end of the machine, what is the censored creator supposed to do? Three strikes and you’re out… Off YouTube forever. Cancelled. The stakes are high for businesses, streamers, and content creators. I’m at Strike One. At Strike Two, you are playing with your YouTube life. YouTube says 95% of those that get to Strike One never get to Strike Two. That makes sense because aggressive self-censorship obviously kicks in.

The issue, of course, is finding the impossible tradeoff between:

- Online free speech absolutism where disinformation, intentional deception and lies, racism, pornography, copyright violation, antisemitism, foreign government interference and other types of fraud, bigotry, manipulation, and deception are rampant; or

- A marketplace of ideas that features only one idea. All content is filtered, censored, and boiled down to homogeneous “purity” where government information is correct, competing viewpoints on important subjects are verboten, and there is no practical avenue for public dissent.

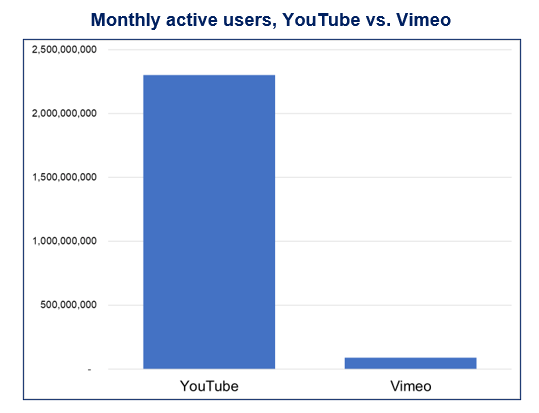

Why don’t I just suck it up and “vote with my feet”? If you don’t like YouTube, just switch to Vimeo or Dailymotion! YouTube/Google has competitors, right? Not really. I tried to leave Google once and go to DuckDuckGo. The search results were spotty and unreliable and I was back kneeling in front of Google a few days later. People tried it with Parler in 2020 and they’re trying it with Gettr now. But network effects are powerful.

Here is how network effects look in the video-sharing industry:

Monthly active users, YouTube vs. Vimeo

Network effects have dropped us into an era of winner-take-all tech capitalism where the unspoken (or sometimes spoken) goal of technology companies is to build a monopoly and then dance with (or capture) the regulator. It’s not like in the old media landscape where you could say “I don’t like NBC, I’m switching to CBS.” Old economy products, technology, and media had fewer or no network effects, so they were less likely to capture their customers and become near-monopolies along specific product lines. In many specific branches of tech and social media, there is now a singular best place to be. Nowhere else will do.

Society has inadvertently delegated ethical and moral decisions to these corporations and corporate algorithms even as those companies’ incentives are opaque or aligned toward shareholders, not the greater good. Poorly understood AI is filtering public discourse and selecting or rejecting via parameters that even its creators don’t fully understand.

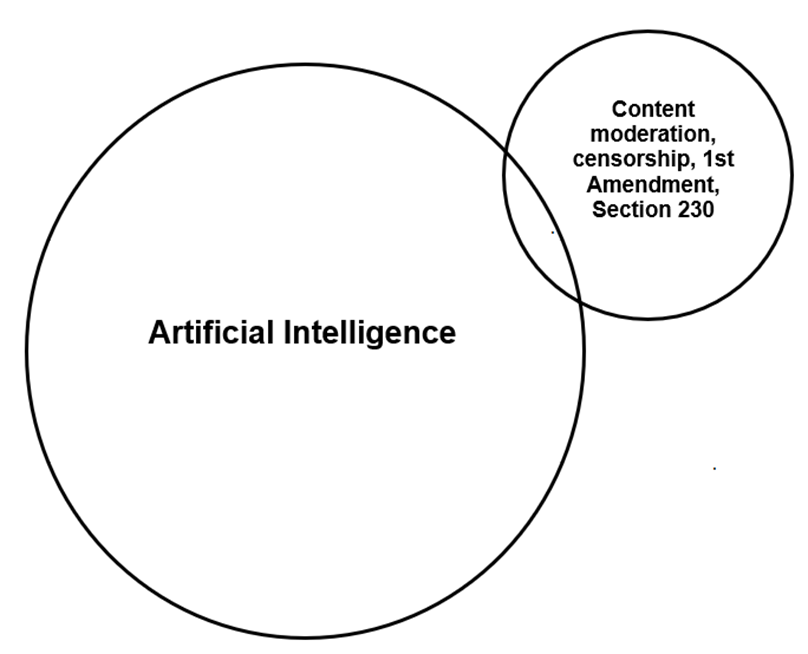

This is a two-level problem. The level one problem is: Who decides what content, or which creators will be censored? The much more important, level two problem is: Who should oversee AI? Its for-profit designers? Or elected lawmakers? China chose the government. So far, the US has made no choice at all and thus the corporations have been left in charge.

Figure: Two immensely important issues in our society. More important than crypto and Web3! The tiny dot inside the intersection is me.

What is the solution?

1) Leaders need to strongly and clearly assert that corporations do not write the laws of public discourse and censorship (explicitly or implicitly). Large technology companies do not own the debate. They should be allowed to participate with the understanding that they have technical skill but also massive conflicts of interest. Section 230 has given them all the power to amplify and censor for maximum profit, but almost none of the downside.

Even if you are anti-government, understand that someone has to make decisions on how AI and censorship exist in our society. Those decisions can be made by the Chief Revenue Officers of a few tech firms, by computer scientists, and by Joe Rogan. Or they can be made by democratically elected lawmakers. The choice to do nothing is in fact the choice to leave these important decisions almost entirely to the tech gatekeepers. Section 230 and the First Amendment are not working.

2) Content verification and censorship systems should be AI-enabled, not autonomous or pure AI. They should also be regulated. Even if it’s slower and more expensive, important corporate AI decisions should have cross-validation from humans. Much as one would not launch a rocket at a foreign city using only AI, seemingly low stakes decisions also need to have human validation or a human appeal process. Both content regulation and AI are enormously important and essentially infrastructure now, and should be regulated as such.

Any human would quickly see that my YouTube video does not contradict YouTube’s medical misinformation policy. An AI-driven decision backed by an AI-driven appeal process is not good. It is cheap to execute and thus optimal for the corporation, but it really sucks for society. I don’t truly care if YouTube needs to hire 40,000 people to do this job properly. And neither should you. Humans can’t do the job perfectly either, of course, but they look at the world and process information differently than AI. Humans are likely to make different and less-correlated errors compared to an AI checking the work of another AI. I don’t know if my appeal was seen by a human or an AI. YouTube doesn’t say.
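The "less-correlated errors" point can be made concrete with a little arithmetic. The sketch below assumes a bad takedown only stands when both the first decision and the appeal get it wrong, and treats each reviewer's mistake as a yes/no event with error rates `p_first` and `p_appeal` and correlation `rho`. The 5% error rate and the correlation values are illustrative assumptions, not figures from YouTube.

```python
import math


def joint_error_probability(p_first: float, p_appeal: float, rho: float) -> float:
    """Probability that both reviewers are wrong on the same case.

    For two yes/no error events with correlation rho:
        P(both wrong) = p_first * p_appeal + rho * sqrt(p1(1-p1) * p2(1-p2))
    rho = 0 means independent errors; rho = 1 (with equal rates) means the
    appeal repeats every first-pass mistake.
    """
    covariance = rho * math.sqrt(
        p_first * (1 - p_first) * p_appeal * (1 - p_appeal)
    )
    return p_first * p_appeal + covariance


# Illustrative numbers only: both reviewers are assumed to be wrong 5% of the time.
ai_checks_ai = joint_error_probability(0.05, 0.05, rho=0.9)     # highly correlated
human_checks_ai = joint_error_probability(0.05, 0.05, rho=0.1)  # weakly correlated

print(f"AI appeal over AI decision:    {ai_checks_ai:.5f}")
print(f"Human appeal over AI decision: {human_checks_ai:.5f}")
```

Under these assumed numbers, the human appeal lets far fewer mistakes through even though the human is no more accurate than the AI. The gain comes entirely from the errors being less correlated.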

3) More competition. This is an obvious solution but seems nearly impossible to achieve. If there was an analogy like CBS:ABC as YouTube:X… I could just go try X. But there isn’t. Maybe there is a competition law solution here but with network effects so dominant, I doubt it.

4) We need to take some small fraction of all the time we spend learning about and debating blockchain, crypto, and Web3, and re-allocate it to learning about and debating a much more important topic: Artificial Intelligence. That’s what I’m going to do. I’m starting with “The Age of AI” by Eric Schmidt, “Complexity” by Mitchell Waldrop, and “The Square and the Tower” by Niall Ferguson. Other recommendations are appreciated (but of course I have already seen “The Social Dilemma”).

This isn’t a low stakes question about some cool new technology like crypto or Web3. These are the highest possible stakes. The ultimate and potentially terminal fate of society could be determined by badly-designed or poorly-supervised algorithms. Profit-maximizing algorithms could turn us all against each other, turning up the temperature until the whole thing bursts into flame. You don’t just let AI run amok with no guard rails because you’re worried China might master it before you.

As social media simultaneously amplifies the loudest and most obnoxious voices, silences dissent, and feeds the roaring fires of political polarization and confirmation bias, the US is so fractured that serious commentators are now talking about once ludicrously far-fetched scenarios like US civil war and dissolution of the union. More AI oversight and regulation and better laws around content moderation and censorship are important and necessary.

As you see, my low stakes run-in with the YouTube robots has got me thinking about the critical importance of AI policy and regulation, especially as it relates to content moderation and censorship. And yes, I know these issues have been around for a long time and this essay could read a bit like me saying: “I just realized racism is bad! Let’s fix it!”

Hey, we don’t all wake up at the same time.

As I learn more about AI, its extreme complexity, and the difficult choices and tradeoffs that need to be made, I’m not optimistic. We can’t even find a way to efficiently distribute $2 plastic COVID tests to Americans, 24 months into a pandemic. Meanwhile, First Amendment absolutism is rampant, censorship is a cuss word, misinformation is literally killing people, and nobody fully understands what social media AI is doing to our society, not even the coders of the AI itself.

We can either let a few corporations make all the decisions on content and news dissemination as they optimize for low cost or maximum revenue (which means mollification of government or max outrage), or we can pass laws to make those decisions. There is no third option.

I’m not an expert on this topic and I’m eager to learn more so please send me links or other learning recommendations. Thanks for reading.

Brent

For more on this topic, this National Security Commission on Artificial Intelligence Report is a useful read.

Comments

I feel like I’m in the minority here when I say: “society” has to think about restrictions on free speech or we will destroy ourselves. Perhaps this seems histrionic, but on our current path, with mutual antipathy and distrust so high, substantial numbers of people believe things that are simply not true and cause actual harm to the general public by perpetuating those beliefs.

I feel like we are hidebound by the notion that almost any censorship is bad, something that is (IMO) a social feature of the 20th century but resting on a misunderstanding of an 18th century document. And I understand that then some entity or institution becomes an arbiter of the truth, but, as you point out, the harm is manifest so the social media companies are pursuing censorship anyway.

Answers are elusive, but I thought this piece was a good counter-argument to the two stances I normally hear.

I disagree. Put no limits on free speech; however, bring back responsibility for actions and inactions. You tend to self govern a lot better if you’re responsible for your own decisions. I don’t mean the tech censor, and I don’t mean #cancel culture. What I mean is those are your opinions. They don’t matter. What matters is when you make decisions. Those decisions should come with responsibilities and repercussions. Right now bad decision making does not come with a consequence, whereas opinions do. That needs to be inverted.

I’m of many minds about free speech limits/no limits.

How do you see this responsibility unfolding for free speech scenarios such as calls to arms or encouraging abhorrent and/or criminal behavior in a world of human frailty?

I am not sure that all bad decision making escapes consequence. I think mostly consequences are inevitable. Not always, but mostly.

Opinions made public get batted around in a public way. You express an opinion publicly, get eviscerated by the peanut gallery, it gets noticed.

Consequence seems to be a more private affair: many will not share misfortune publicly, and causality can be difficult to prove even without denial being present. Self-governance only exists if there is self-awareness, and it seems to me we have bred a “100% right, 100% of the time” mindset that is not conducive to the self-examination necessary to produce that awareness.

Brent:

I do look for parables within our popular culture. Your piece reminded me both of “Brazil” (so don’t assume you can project insignificance on chance incidents) and “I, Robot” (the robot calculates the odds of survival and lets the kid die and saves the adult). As someone who generates content for a living, I can see how the thought of a machine judging the intent or value of your work would be very unsettling to you, so thanks for pointing that out. Hope I got your point right.

On free speech I am extremely hands off with very few limits. Harassment, inciting or threatening imminent violence, fraud. Those things are justifiable limits for me and subject to normal US court process with rights to defense etc. The idea of being hard to be found guilty of a violation of free speech is a feature, not a bug.

I’m looking more towards consequences of our leaders’ actions/inactions. A glance at today’s headlines gives a few examples of actions/inactions that should lead to serious consequences but will end with nothing burgers: Boris Johnson for locking down everyone else while throwing elaborate parties for cronies. Insider trading at the Federal Reserve. Fauci covering up his involvement with Peter Daszak and squashing scientific inquiry into Covid’s origins. Powell’s entire “transitory” fraudulent narrative. There’s so much to add to this list. Our leaders’ actions only have negative consequences on the public; rarely are the leaders themselves negatively affected. The positive consequences are usually the inverse.

I think the approach here depends on which version of free speech you’re talking about. Is it free speech or Free Speech? Because they’re different things. The former is the right of every American to criticize their government (or anything else) without fear of said government silencing them. The latter is the belief that you can say anything you want on any platform and live free and unencumbered by consequences. I think Free Speech is an unrealistic expectation that is used more often than not as a cover for people to attempt to get away with being jerks, or stirring controversy, or enriching themselves through some elaborate grift.

The bigger problem is the one that’s embedded in the sort of meta issue that you’re (not incorrectly) concerned with. It’s the feeling that ‘somebody ought to be doing something about this’. I think we all feel that way to some degree. I’m as close to a free speech absolutist as you’ll find, but even I get that uncomfortable feeling when I think about the sort of insane shit that exists on Facebook. But now the embedded problem: if Facebook/Google/Twitter/et al are in the business of controlling the flow of information, and they are, then how do we categorize them in terms of what role they’re playing in society? Those companies all operate monopolies with the tacit blessing of the Federal government. Lots of people in government don’t like those monopolies and have the regulatory power to disrupt them. But if you’re too scared or too uninformed to use your power then do you really even have it? Breaking up Big Tech is the potential energy that’s stored in the D-cell battery that fell behind the couch and you forgot about two years ago. Maybe one day you’ll find it and put it to use, but for now it will always remain in the state of readiness without having anywhere to go.


So if nobody is willing or able to regulate a thing then we have to say that whatever that thing is doing has the approval of said regulators. Right now, today, the United States government approves of what Facebook/Twitter/Google/et al are doing. Doesn’t that sort of make Big Tech a form of shadow government? Is DC really our nation’s capital or is Silicon Valley truly the seat of power?


From a purely corporate law standpoint Facebook should be able to determine what their users can say or do. It’s their toy and they can tell you how to play with it. But if Facebook is a quasi government enterprise–in operation but not in law–then does that change their right to control the content? If we view these companies as sovereign authoritarian states instead of as regular corporate actors how does that change the way our actual, real government treats them?

There’s no clean answer because we’ve just never been here before. These guys are all the East India Company. Once you’ve handed over the rights to control trade and colonization it’s hard to walk it all back in an orderly fashion.

I just listened to an interesting podcast interview (The New Abnormal E179 time 18:30-35:00) that appears to provide more illumination on this problem.

My understanding, in a nutshell, is that many (if not all) non-subscription “engagement by enragement” websites are primarily financed by advertising $$ channeled via Google/Facebook. What is less well known are the behind-the-scenes ad-buying companies (“dark money” if you will) that direct these $$.

Without these ad dollars supporting their “free speech”, these political grievance entrepreneurs, grifters and con artists might have to go get more socially beneficial jobs and a goodly chunk of the mis/dis information might dry up.

An unintended consequence of this, apparently, is that Mr/Mrs small biz owner buying ads through Facebook/Google might find their ads showing up on some website or embedded in some YouTube video whose political philosophy/cause they do not support or agree with at all, e.g. white supremacist, QAnon etc.

That sounds pretty interesting. When you think about the massive fortune that Facebook and Google have accumulated it’s useful to remember that it all started as a way to generate ad revenue. One of the things I repeat (seemingly endlessly) is that the point of any TV show, network or cable, isn’t to inform you or entertain you, it’s to sell Tide and Liberty Mutual Insurance. CNN and Fox run their dumb programming not because of some moral obligation, but instead as fan service to the marks who they hope will eventually spend a little extra money buying something made by Procter & Gamble.
