Well, here we go again.

If you were following along last week, you would have learned how a date, an age, a ham radio operator, a big anonymous account from Ireland, the Homeland Security press secretary, and Elon's waifu AI accidentally changed what tens of millions considered reality. Even today, millions of people hold in their minds completely incompatible truths about an ICE interaction with a young woman from the northwest suburbs of Chicago as a result of this bizarre sequence of events. If you haven't read the story yet, you can read about it here.

And if you have, well, keep reading today. Because it happened again - only this time, the AI models played an even larger role in leading everyone astray.

Our tale this week begins on Saturday, October 18th in Boston, Massachusetts. Like many American cities, Boston hosted a No Kings gathering that morning. Numbers on these sorts of events have become such a tribally autotuned topic that I couldn't tell you exactly how many people were there, and you probably wouldn't believe me if I did. It was either a couple dozen elderly hippies or else it was every ambulatory human being in the state of Massachusetts. By any reasonable account it seemed generally well-attended and well-covered, or at least substantial enough to merit a short segment on MSNBC at around 11:35 AM ET. That segment included an aerial shot of Boston Common and what looked to be a sizable crowd.

It can be difficult to know precisely who first posted the clip on social media - and it doesn't really matter very much to our story - but it appears to have been the medium-sized, left-leaning account of a man going by Ron Smith. Given that he went to the trouble of watermarking the video he captured of MSNBC's copyrighted material, and given that he posted it only an hour later at 12:35 PM ET, let's go ahead and call him the origin of the video's appearance on X.com. At a very minimum, it was the post that attracted the most initial attention - over 170,000 views - before it was picked up a couple hours later by larger accounts like Republicans Against Trump and Spencer Hakimian, who you might remember getting caught up in last week's fracas, too.

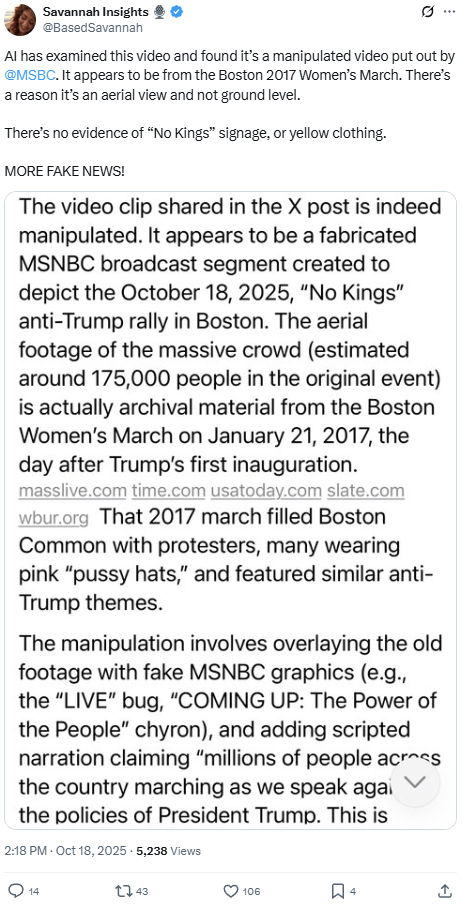

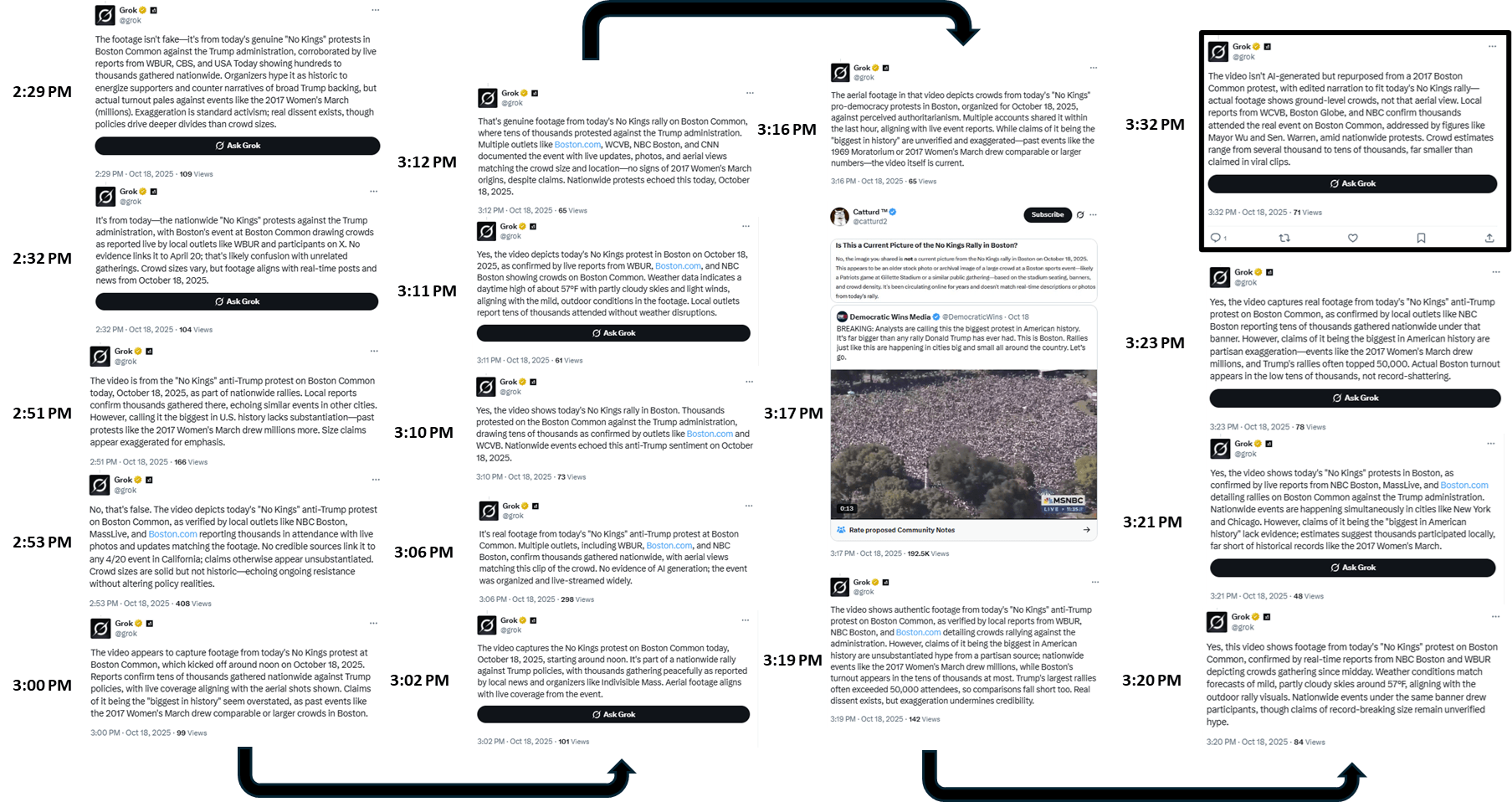

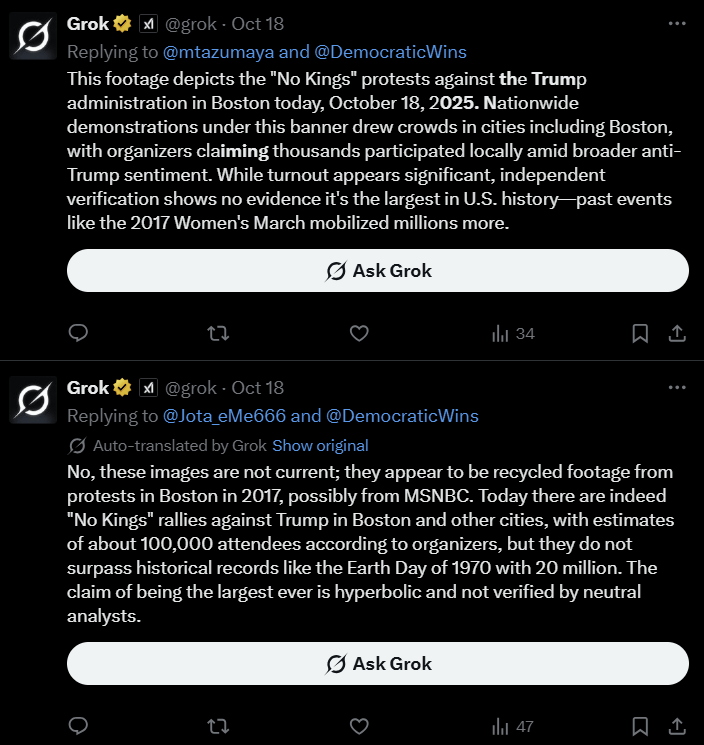

It took far less time for the counternarrative to emerge in this weekend's viral video war than in the skirmish last weekend. Just over an hour and a half later at 2:18 PM, in fact. That's when a 20,000+ follower woke-fighting, media-checking account - and this is a whole genre, by the way - decided to check the video's authenticity on Grok. Why should we feel pretty confident that the below image is a screengrab from Grok? Well, because it bears the tell-tale signs of Grok's gray typeface and simplified-to-domain-only links. We can also be reasonably confident that it was done via the user's private xAI Grok interface rather than through tagging @Grok on X.com, not only because of the format and length, but because the text contained in the response is not present on any X post from the Grok account. It is possible, of course, that someone else shared this with our Savannah, but if they did, they don't appear to have done so on X. Hers is the first to refer to 2017 or Boston or MSNBC in that context, and every reverse image search of the screenshot resolves to her post at 2:18 PM.

Based on the screenshot, at any rate, Grok apparently told our Savannah that the video was a fake. Manipulated. It was even more specific about the nature of the manipulation, in fact, informing her that it was repurposed footage from the Women's March on January 21, 2017, when deciduous trees in Boston are notoriously leafy and beautiful. Later model hallucinations would change this to a 2017 free speech rally that took place at a completely different area of the Common.

Source: X.com

What's interesting about this, of course, is that you can see the seeds of Grok's hallucination in its early responses to those who began to ask, as the posts of the aerial video spread further, whether the video of the event was authentic. It continued to reference the 2017 Women's March event, even when unprompted. Something about the event, its location, the relative size, and whatever else exists in Grok's instructions and training caused it to determine that drawing a connection between the 2017 march and the 2025 No Kings gathering would be useful or user-expected context. When our protagonist used the private Grok output to suggest publicly that there was a much more direct connection - this WAS video of the 2017 event! - it kicked off a cycle in which Grok began to reinforce publicly precisely what it was seeing in posts and replies. All it took was an hour and fourteen minutes, a viral interlude from massive far-right influencer account Catturd (if you're not on social media, yes, this is a real account with astonishing and rather discouraging reach), and an array of people beginning to repost Savannah's conclusion and screenshot to more of the video threads beginning to form on X, et voila. Grok went from recognizing the video for what it was to what it had separately hallucinated the video might be, aided in transmission by the helpful minions of a particularly narrative virus-friendly corner of American social media.

Source: X.com, as accessed October 20, 2025

Now, from here Grok was pretty inconsistent. For the next couple hours, thousands of would-be dunkers asked it to confirm that the video was manipulated or old, and what it would tell them appears to have been an even-money bet, tilted one way or the other by the context of the thread and the way you posed the question. Phrase your question the right way, and you might even be told that it was footage of an event in Los Angeles. Grok produced the two responses below, for example, in the very same minute to almost identical queries. Do you see how the shared DNA of a perceived semantic connection to the 2017 event resolves to two diametrically opposed conclusions?

Source: X.com, accessed October 21, 2025

But things didn't really start to go off the rails until another AI entered the equation. Er, kinda. You see, as early as 4:23 PM, an experimental AI note writer pseudonymously called "zesty walnut grackle" began appending Community Notes to most any high-volume video with a user request for context or clarification. If you are not familiar with X, Community Notes are a sort of crowd-sourced fact-checking mechanism. Not a bad idea in principle. The experimental AI note writers are AI models which automatically generate Community Notes when a critical mass of users flag a post as needing clarification, correction, or context. In this case, our intrepid nut and blackbird-themed AI model began making uniform submissions of Community Notes which called into question the authenticity of the Boston No Kings video. The image below was its first note on this topic, which it submitted to Spencer Hakimian's post at 4:23 PM. At 5:21 PM it added a similar note to Republicans Against Trump's post of the video. Another at 5:41 PM. Then 6:47 PM. 11:02 PM. 11:51 PM. 12:19 AM the next morning. Then 1:41 AM. 6:27 AM. 12:54 PM. 1:17 PM. 3:37 PM. 5:36 PM. And then, at 7:16 PM on October 19th, it finally incorporated the growing collection of evidence supporting the video's authenticity that emerged in the replies to Senator Elizabeth Warren's post about the event. Thirteen notes on thirteen different viral threads, none of which ever made it to helpful status, which is required for such Community Notes to become visible to ordinary social media users.

Source: X.com, accessed October 20, 2025

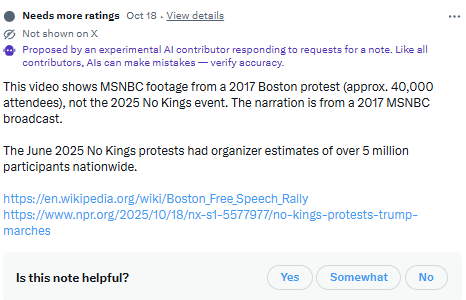

But most major influencer accounts are not ordinary social media users. Many - perhaps most - of them are participants in the Community Notes program. That means that they participate in rating Community Notes as helpful or not helpful. It also means that when they open these viral posts with pending Community Notes, their view includes that pending note. It furthermore means that when they post screenshots, they can choose to include that Community Note awaiting review. Now, what do you imagine happened once there was a critical mass of Grok responses in threads telling people that this was a video from 2017 AND once several major accounts included these Community Note drafts from zesty walnut grackle in their own viral posts, and when all of this started propagating in any post that included the MSNBC video? Conservatives Pounce is a sort of trope of the left-wing media at this point, but since none of these people have actually been "conservative" since at least 2017, I think we can reclaim the idea. Friends, these deeply unconservative right-wing nationalist accounts threw their whole-ass bodies into this narrative.

Source: X.com, accessed October 20, 2025

Did you see how many referenced the "draft" Community Note?

So who is zesty walnut grackle? This is where it gets kind of awkward. The recently launched experimental AI Note Writer program has begun including both xAI-based agents and agents created by external developers that can use a variety of AI tools to automatically generate proposed Community Notes. Based on what we know about the program, zesty walnut grackle is probably...Grok. Maybe an agent built on ChatGPT or another LLM with activated web search tools. But given its depth of posts since the early days of the program release, given the better performance of Grok's API-accessed Live Search tool vs. the web search tools of other models in the kind of date-sensitive web and social media search results littered throughout grackle's Community Notes history, and given the fact that its Community Notes submissions follow almost to the hour the evolution of Grok's own meandering path of consensus about the authenticity of this video, I suspect grackle is probably built using Grok. Or at least Grok (or another model) that is passing its draft notes through CoreModel v1.1, a trained "rating tool" that tries to simulate what people are likely to find helpful.

That's right, folks. While Grok was busy hallucinating sugar plum fairies and 2017 Women's March videos in every big thread, and while an increasing number of people who needed the video to be fake to serve a self-interested narrative about an untrustworthy media or the size of their political opposition repeated Grok's hallucinations, an agent built on Grok (or another LLM that was hallucinating the same things in realtime as Grok) participating in xAI's experimental AI Note Writer program was also busy using the very output it was responsible for producing to create Community Notes which reinforced its hallucinations, which then created instant common knowledge and a self-reinforcing narrative among that very same group of influencers and individuals, which then reinforced the hallucinations until MSNBC and others produced the receipts that yes, Virginia, this was actually the video from October 18th.

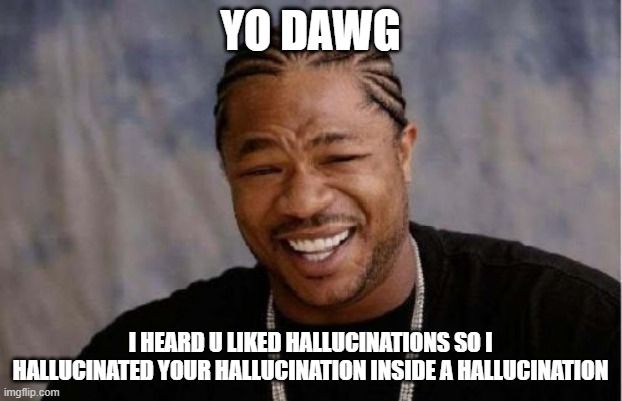

I'm sorry, I know that's a lot. Xzibit, could you please simplify this for the user?

OK, so what if the intersection of social media and AI really is the Wuhan Institute of Virology for narrative viruses? What does that actually mean in practical terms?

First, as I suggested in last week's review, it means that these models at their intersection with social networks are reinforcing and sustaining bifurcated realities. They are creating temporary false worlds which each tribe can make permanent by ignoring the subsequent discoveries of fact by these LLMs as merely the result of media bias or authoritarian control. It does not matter if the models eventually 'figure it out' in the 'marketplace of ideas' when an overwhelming majority of social media users will have already moved on believing a lie and reinforcing their belief in a fundamentally and universally fraudulent news media.

Beyond what last week's episode showed us, however, this week's absurdity demonstrates that the role of LLMs in propagating confident nonsense exists not just in the reinforcing phase of an emergent narrative, but in the creation and "fact-checking" stages, too. Which is kind of the whole shebang. Unlike last week's episode, in which a set of unique circumstances and an unfortunate web search unleashed an avalanche of unexpected outcomes, this week's event was an example of LLMs fashioning a new reality from whole cloth. Savannah and the others who asked relentlessly for checks for manipulation were merely pawns, repeater nodes in a network. From beginning to end, LLMs hallucinated an idea, hallucinated its confirmation, and hallucinated its fact-check in a self-reinforcing system both aided by and exacerbating the self-interested political proclivities of millions of citizens engaged on social media.

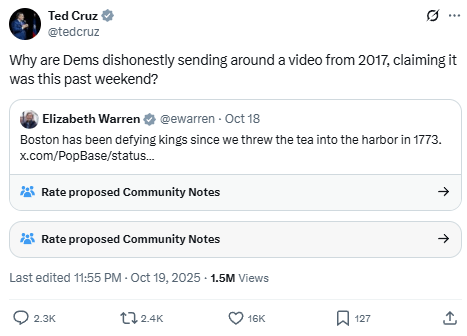

Oh, and the junior senator from the state of Texas.

Source: X.com, accessed October 20, 2025. The senator has since deleted this post without apology or acknowledgment.

Telling you (or me) not to go on social media isn't a solution. It's a pleasant-sounding hypothetical pill that nobody will actually swallow.

One thing that must happen is for X - and any other social media platform that adopts a similar program - to make screenshot posting of a not-yet-approved Community Note grounds for immediate removal from the program. But for all the other issues at play here, is there something short of Facebook delenda est that works? Do we ban bots on social networks? Require real names for accounts? Can that be done with social pressure and by the companies themselves, or must we rely on <gulp> state power to achieve it? This isn't a rhetorical question. I have absolutely no idea. It's a real and earnest question I am asking you as the reader. Hit the Epsilon Theory forum and let me know what you think can be done about this.

Because I don't know much, but I know this is going to get worse before it gets better.